I created the following lovable.dev applications, which should be compiled and hosted on the KI-Léierbud Plesk server:

ki-leierbud-navigation

Link: https://ki-leierbud-navigation.lovable.app/

This app is used to access WordPress posts related to navigation (TOCs, …) in the KI-Léierbud portal via the WordPress REST API.

The ID of a navigation WordPress post is passed to the app by appending ?p=ID to its URL.

Example: https://ki-leierbud-navigation.lovable.app/?p=12
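A minimal sketch of how an app like this could read the post ID from `?p=ID` and load the post through the standard WordPress REST endpoint `/wp-json/wp/v2/posts/<id>`. The portal base URL `WP_BASE` and the helper names are hypothetical; only the endpoint path and the `title.rendered` / `content.rendered` fields are standard WordPress REST API.

```typescript
// ASSUMPTION: hypothetical WordPress base URL of the KI-Léierbud portal.
const WP_BASE = "https://ki-leierbud.lu";

// Subset of the fields returned by GET /wp-json/wp/v2/posts/<id>.
interface WpPost {
  id: number;
  title: { rendered: string };
  content: { rendered: string };
}

// Returns the numeric post ID from the "p" query parameter, or null if missing or invalid.
function getPostIdFromUrl(url: string): number | null {
  const raw = new URL(url).searchParams.get("p");
  if (raw === null || !/^\d+$/.test(raw)) return null;
  return Number(raw);
}

// Builds the REST endpoint for a single post (trailing slashes on the base are removed).
function postEndpoint(base: string, id: number): string {
  return `${base.replace(/\/+$/, "")}/wp-json/wp/v2/posts/${id}`;
}

// Fetches one post; throws if the server answers with a non-2xx status.
async function fetchPost(id: number, base: string = WP_BASE): Promise<WpPost> {
  const res = await fetch(postEndpoint(base, id));
  if (!res.ok) throw new Error(`Request for post ${id} failed (HTTP ${res.status})`);
  return (await res.json()) as WpPost;
}
```

With the example URL above, `getPostIdFromUrl` yields `12`, and `fetchPost(12)` requests `https://ki-leierbud.lu/wp-json/wp/v2/posts/12` under the assumed base URL.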

ki-leierbud-knowledge

Link: https://ki-leierbud-knowledge.lovable.app/

This app is used to access knowledge-related WordPress posts in the KI-Léierbud portal via the WordPress REST API.

The ID of the first knowledge post in a specific category is passed to the app by appending ?p=ID to its URL. Users can browse through the posts of the same category by swiping or with the forward and back buttons. The language can be switched with a language selector in the page header; the list only shows languages for which a translation is available.
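Browsing within a category can be sketched as two steps: list the post IDs of the category through the REST API, then look up the neighbours of the current post for the back and forward buttons. This is a sketch under assumptions: ordering by publication date (ascending) is a guess, the real app may use another criterion, and the helper names are hypothetical. The query parameters `categories`, `orderby`, `order`, `per_page` and `_fields` are standard WordPress REST API parameters.

```typescript
// ASSUMPTION: posts in a category are ordered by publication date, oldest first.
// per_page is capped at 100 by WordPress; larger categories would need pagination.
function categoryListQuery(categoryId: number): string {
  const params = new URLSearchParams({
    categories: String(categoryId),
    orderby: "date",
    order: "asc",
    per_page: "100",
    _fields: "id", // only return the post IDs
  });
  return `/wp-json/wp/v2/posts?${params.toString()}`;
}

interface Neighbours {
  prev: number | null; // target of the back button
  next: number | null; // target of the forward button
}

// Given the ordered post IDs of a category, returns the previous and next post IDs.
function neighbours(orderedIds: number[], currentId: number): Neighbours {
  const i = orderedIds.indexOf(currentId);
  if (i === -1) return { prev: null, next: null };
  return {
    prev: i > 0 ? orderedIds[i - 1] : null,
    next: i < orderedIds.length - 1 ? orderedIds[i + 1] : null,
  };
}
```

A `null` neighbour means the corresponding button can be disabled, for example on the first and last post of a category.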

When the user clicks a link to another post in the portal, the current language setting (?lang=xx) is kept. If the linked post is not available in that language, the version in the next language of the priority list is fetched instead.
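The language selector and the fallback rule can be sketched as two small functions over a map of available translations. Several assumptions apply here: how translations are exposed depends on the multilingual setup of the WordPress site, so the `Translations` map (language code to post ID) is hypothetical, the priority order `LANGUAGE_PRIORITY` is only an example, and "next language" is interpreted as "first available language in priority order".

```typescript
// ASSUMPTION: example priority order; the real list is defined by the portal.
const LANGUAGE_PRIORITY: string[] = ["lb", "de", "fr", "en"];

// ASSUMPTION: hypothetical map from language code to the post ID of that translation.
type Translations = Record<string, number>;

function has(t: Translations, lang: string): boolean {
  return Object.prototype.hasOwnProperty.call(t, lang);
}

// Languages shown in the header selector: only those with a translation, in priority order.
function selectableLanguages(t: Translations, priority: string[] = LANGUAGE_PRIORITY): string[] {
  return priority.filter((lang) => has(t, lang));
}

// Resolves which post to load for ?lang=xx: the requested language if available,
// otherwise the first available language in the priority list; null if none exists.
function resolveTranslation(
  t: Translations,
  requested: string,
  priority: string[] = LANGUAGE_PRIORITY,
): { lang: string; postId: number } | null {
  if (has(t, requested)) return { lang: requested, postId: t[requested] };
  for (const lang of priority) {
    if (has(t, lang)) return { lang, postId: t[lang] };
  }
  return null;
}
```

For a post translated only into German and French, a link followed with `?lang=en` would load the German version under the example priority list.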

Examples:

To do:

- add support for showing the author in the footer

- define design with a palette of background colors

- check that the navigation works correctly

- integrate the app into the subdomain knowledge.ki-leierbud.lu

ki-leierbud-quiz

Link: https://ki-leierbud-quiz.lovable.app/

This app is used to access quiz-related WordPress posts in the KI-Léierbud portal via the WordPress REST API.

The ID of the first post of a specific quiz in a specific category is passed to the app by appending ?p=ID to its URL. Users can browse through a quiz (the posts in the same category) by swiping or with the forward and back buttons. Language switching works the same way as in the knowledge app.
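Swiping can be reduced to a small decision function that turns the start and end coordinates of a touch into a back or forward action. This is a sketch: the 50 px threshold and the convention "swipe left = forward" are illustrative choices, not taken from the app. In a React component it could be called from `onTouchStart` / `onTouchEnd` handlers using `clientX` and `clientY` of the touch.

```typescript
type SwipeAction = "forward" | "back" | "none";

// ASSUMPTION: 50 px minimum horizontal distance; swipe left moves forward.
function swipeAction(
  startX: number,
  endX: number,
  startY: number,
  endY: number,
  threshold = 50,
): SwipeAction {
  const dx = endX - startX;
  const dy = endY - startY;
  // Ignore short moves and mostly vertical moves, so normal page scrolling still works.
  if (Math.abs(dx) < threshold || Math.abs(dx) < Math.abs(dy)) return "none";
  return dx < 0 ? "forward" : "back";
}
```

Keeping the decision separate from the event handlers makes the gesture rule easy to tune and to test without a browser.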

The header of the quiz page shows a selection list with the possible answers. The image or page number of the quiz is overlaid on each page, and the answer selected for a quiz question is overlaid at the bottom of the page. The button to submit the quiz is only enabled once all questions have been answered. After submission, an evaluation page with the results is shown to the user.
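The submit rule and the evaluation can be sketched as follows. The data model is hypothetical: one question per quiz page, answers keyed by post ID, and exactly one correct option per question. Where the correct answers are stored (and whether scoring happens in the browser or on the server) is not specified in these notes.

```typescript
// ASSUMPTION: hypothetical data model, one question per post with a single correct option.
interface Question {
  postId: number;
  correctOption: string;
}

type Answers = Record<number, string>; // post ID -> selected option

// The submit button is enabled only when every question has a selected answer.
function allAnswered(questions: Question[], answers: Answers): boolean {
  return questions.every((q) => answers[q.postId] !== undefined);
}

interface Evaluation {
  correct: number;
  total: number;
  wrongPostIds: number[]; // pages to highlight on the evaluation page
}

function evaluate(questions: Question[], answers: Answers): Evaluation {
  const wrong = questions
    .filter((q) => answers[q.postId] !== q.correctOption)
    .map((q) => q.postId);
  return { correct: questions.length - wrong.length, total: questions.length, wrongPostIds: wrong };
}
```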

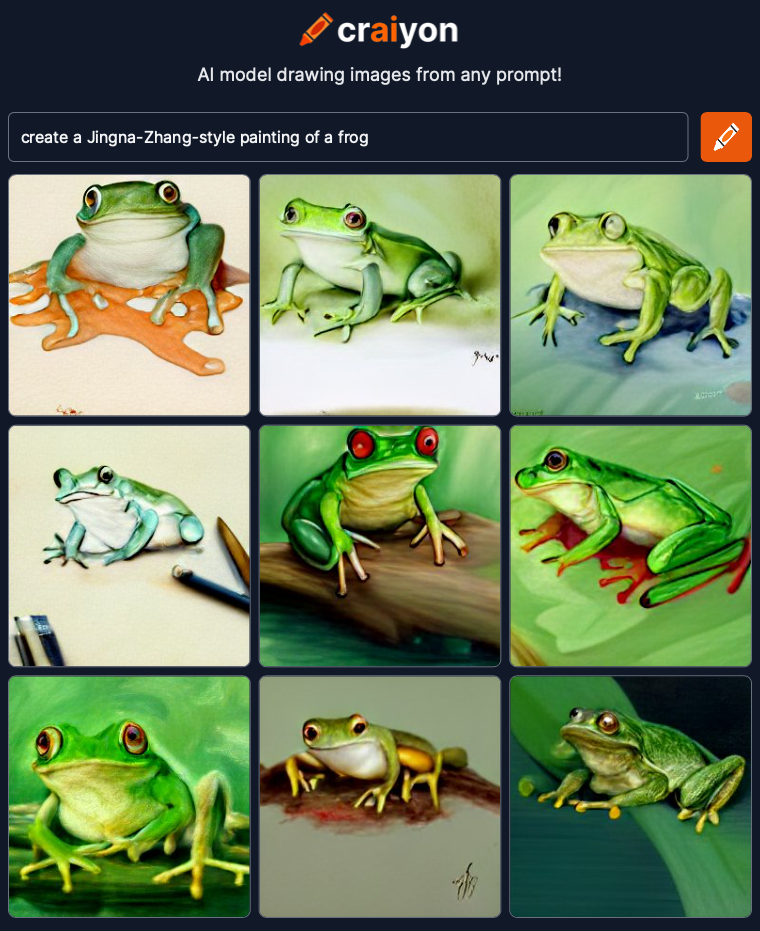

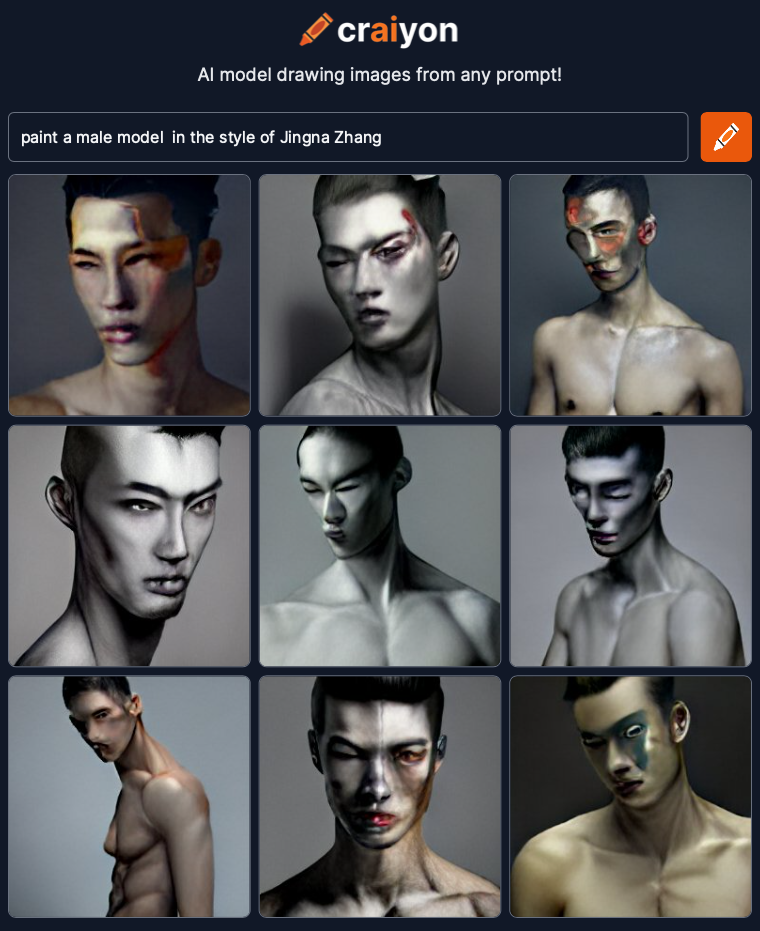

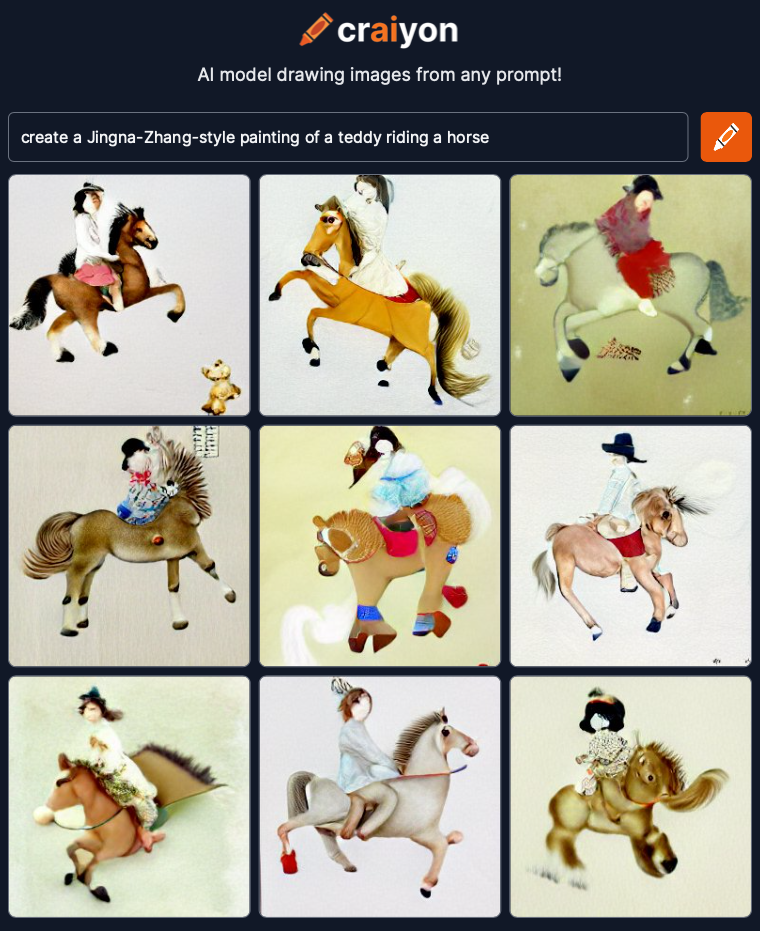

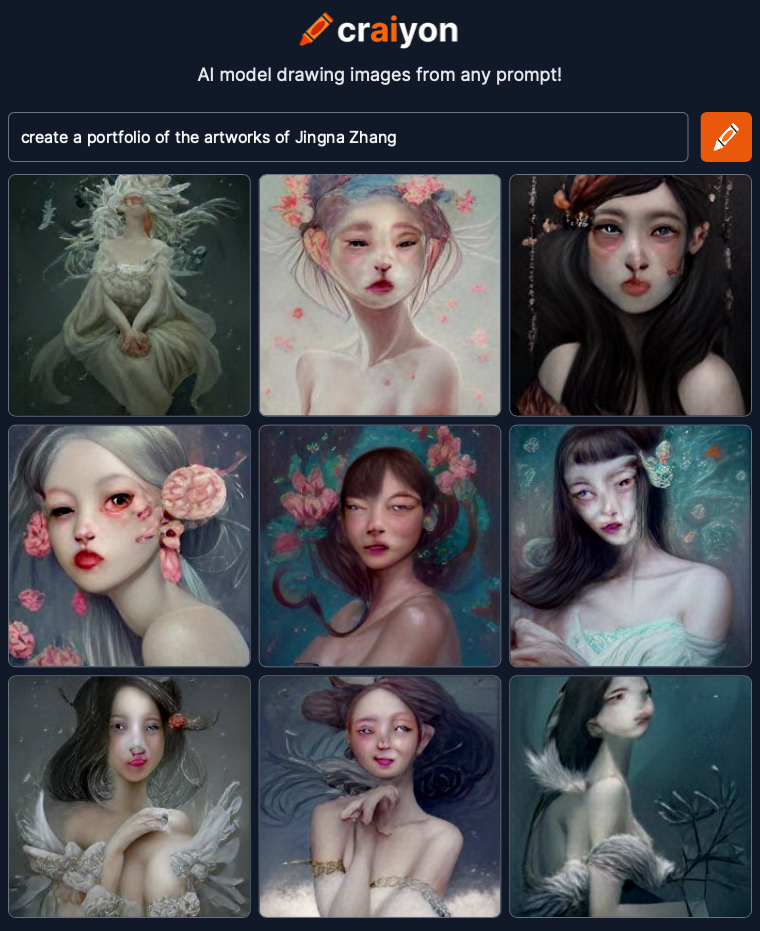

Examples of image-style quizzes:

To do:

- select the final images

- define design with a palette of background colors

- check that the navigation works correctly

- integrate the app into the subdomain image-quiz.ki-leierbud.lu

ki-leierbud-polling

Links:

This example app is used to access WordPress posts related to polls about emotions in the quiz section of the KI-Léierbud portal via the WordPress REST API.

The ID of the first post of a specific poll in a specific category is passed to the app by appending ?p=ID to its URL. Users can browse through a poll (the posts in the same category) by swiping or with the forward and back buttons. Language switching works the same way as in the knowledge app.

The header of the quiz page shows a selection list with several emotions. The image or page number of the poll is overlaid on each page, and the emotion selected for a poll question is overlaid at the bottom of the page. The button to submit the poll is only enabled once all questions have been answered.

The emotions selected by a user are stored in a dedicated database on the Plesk server. After submission, an evaluation page is shown to the user that compares their choices with the emotions selected by other users.
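The comparison on the evaluation page can be sketched as turning per-emotion vote counts into percentages and marking the user's own choice. This assumes, hypothetically, that the server aggregates the stored answers into a count per emotion for each poll question (including the user's just-submitted vote); the database schema and the endpoint delivering these counts are not described in these notes.

```typescript
// ASSUMPTION: hypothetical aggregate returned by the server: emotion -> number of users.
type EmotionCounts = Record<string, number>;

interface EmotionShare {
  emotion: string;
  count: number;
  percent: number; // rounded to one decimal place
  isMine: boolean; // true for the emotion the current user selected
}

// Converts raw counts into shares, most frequent emotion first.
function compareWithOthers(counts: EmotionCounts, myEmotion: string): EmotionShare[] {
  const total = Object.values(counts).reduce((sum, n) => sum + n, 0);
  return Object.entries(counts)
    .map(([emotion, count]) => ({
      emotion,
      count,
      percent: total === 0 ? 0 : Math.round((count / total) * 1000) / 10,
      isMine: emotion === myEmotion,
    }))
    .sort((a, b) => b.count - a.count);
}
```

For counts `{ joy: 3, fear: 1 }` and a user who chose `fear`, the result lists `joy` at 75% first and `fear` at 25% marked as the user's choice.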

Examples of polls:

To do:

- select the final images and videos

- select the final emotions

- define design with a palette of background colors

- check that the navigation works correctly

- integrate the app into the subdomains image-polling.ki-leierbud.lu and video-polling.ki-leierbud.lu.